Make OpenShift console available on port 443 (https) [UPDATE]

The main reason this blog post exists is that OpenShift v3 and Kubernetes are very tightly bound to port 8443. This could change in the future.

UPDATE:

Since OpenShift Enterprise 3.4, both ports, openshift_master_api_port and openshift_master_console_port, are documented in Configuring Master API and Console Ports.
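
With openshift-ansible, these two variables are set in the inventory. The following is a minimal sketch, assuming an installation where the masters should listen on 443 directly and that the variables belong in the `[OSEv3:vars]` section; the function name `print_master_port_vars` is only illustrative.

```shell
#!/bin/sh
# Print the inventory lines that move the master API and the web console
# to port 443.
# Assumption: the lines go into the [OSEv3:vars] section of an
# openshift-ansible inventory; merge them into your own file by hand.
print_master_port_vars() {
  cat <<'EOF'
[OSEv3:vars]
openshift_master_api_port=443
openshift_master_console_port=443
EOF
}
```

Calling `print_master_port_vars` writes the three lines to stdout so they can be reviewed before they are copied into an existing inventory.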

I have used a dedicated haproxy pod several times to provide access to the OpenShift v3 web console and API on port 443 (https).

This concept can also be used for other services in the PaaS that are able to talk via SNI.

Since my previous post, Make OpenShift console available on port 443 (https), OpenShift has evolved a lot, which makes the setup much easier.

Possibilities

You now have at least two options to solve this.

- OpenShift router image

- ME2Digital haproxy image

Both solutions have their pros and cons.

| Feature | OCP Router | ME2Digital |
|---|---|---|
| Supported by | Red Hat | ME2Digital |
| HAProxy version | 1.5 with RH patches | HAProxy.org 1.7 with Lua |
| Uses OCP / Kubernetes API | ✔️ | ➖ |
| Configurable via ENV | ✔️ | ✔️ |
| Configurable via configmaps | ✔️ | ✔️ |

Prerequisites

- Time!

- Understanding of OpenShift v3, Kubernetes and Docker

- SSL certificate for the master service [optional]

- Write access to a git repository

- SSH key for deployment [optional]

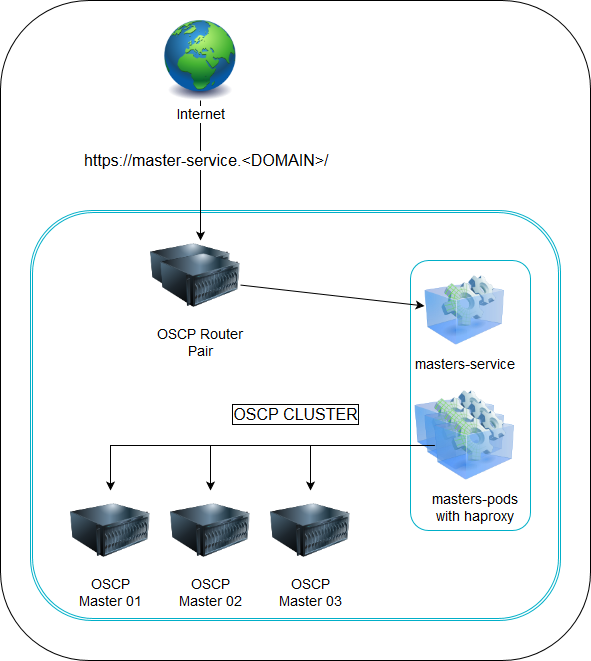

Picture

Steps

Because the solution with the ME2Digital haproxy image is easier for me, I will describe that one.

create app

$ oc new-app me2digital/haproxy17 \
  --name=masters-service \
  -e TZ=Europe/Vienna \
  -e STATS_PORT=1999 \
  -e STATS_USER=aaa \
  -e STATS_PASSWORD=bbb \
  -e SERVICE_TCP_PORT=13443 \
  -e SERVICE_NAME=kubernetes -e SERVICE_DEST_PORT=8443 \
  -e SERVICE_DEST=kubernetes.default.svc.cluster.local \
  -e DEBUG=true

There is a default haproxy config file which creates one entry for the kubernetes.default.svc.cluster.local destination.

You can use the name of the kubernetes service because the default haproxy config file has DNS resolving set up.
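
To make the role of the environment variables more concrete, here is a rough sketch of the kind of TCP proxy section they could translate to. This is not the image's actual template. The function name `render_haproxy_sketch`, the `DNS_SERVER` variable, its default address and the resolver name `cluster_dns` are all assumptions for illustration; only the `SERVICE_*` variables come from the `oc new-app` call above.

```shell
#!/bin/sh
# Hypothetical rendering of a TCP proxy section from the SERVICE_* variables.
# NOT the template shipped in the image; it only shows how a listener, a
# backend server and a DNS resolvers section fit together in haproxy 1.6+.
render_haproxy_sketch() {
  # Fall back to the values from the oc new-app example when unset.
  name="${SERVICE_NAME:-kubernetes}"
  port="${SERVICE_TCP_PORT:-13443}"
  dest="${SERVICE_DEST:-kubernetes.default.svc.cluster.local}"
  dest_port="${SERVICE_DEST_PORT:-8443}"
  # DNS_SERVER is a made-up variable; the default address is an assumption.
  dns="${DNS_SERVER:-172.30.0.1}"

  cat <<EOF
resolvers cluster_dns
  nameserver dns1 ${dns}:53
  hold valid 10s

listen ${name}
  bind :${port}
  mode tcp
  server ${name} ${dest}:${dest_port} check resolvers cluster_dns
EOF
}
```

The `resolvers` reference on the `server` line is what lets haproxy re-resolve the service name at runtime instead of only once at startup.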

expose route

You have several options for the route.

passthrough

This route uses the certificates served by the OCP master servers.

oc create route passthrough masters-service --service=masters-service

reencrypt

This route requires the certificate authority (CA) of the OCP master servers.

oc create route reencrypt masters-service --service=masters-service --dest-ca-cert=FILENAME

If you want to use a certificate other than the wildcard one from the default router, you can add the dedicated certificate on this command line or afterwards, as described in Re-encryption Termination.
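
After creating either route, it can help to check which host name and termination type it ended up with. A small sketch, assuming `oc` is logged in and the current project contains the route; the helper name `show_route` is made up for this example.

```shell
#!/bin/sh
# Print the URL and the TLS termination type of a route.
# Assumes "oc" is logged in and the route lives in the current project.
show_route() {
  route="${1:-masters-service}"
  # .spec.host and .spec.tls.termination are fields of the Route object.
  host="$(oc get route "$route" -o jsonpath='{.spec.host}')" || return 1
  tls="$(oc get route "$route" -o jsonpath='{.spec.tls.termination}')" || return 1
  echo "https://${host}/ (${tls})"
}
```

`show_route masters-service` prints a single line with the URL and the termination type. The master's `/healthz` endpoint under that URL is a convenient target for a first `curl` test.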

configuration

You can use your own config file via a config map.

own config file

I use the following container-entrypoint.sh.

This script uses several environment variables. After the config map has been created and mounted, you can set the path to the config file in the variable CONFIG_FILE.

The default file is haproxy.conf.template.

You can use this sequence for the setup:

$ oc create configmap haproxy-config \
  --from-file=haproxy.config=<PATH_TO_CONFIGFILE>

$ oc volume dc/masters-service --add \
  --name=masters-service \
  --mount-path=/mnt \
  --type=configmap \
  --configmap-name=haproxy-config

$ oc env dc/masters-service CONFIG_FILE=/mnt/haproxy.config
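
To confirm that the last step took effect, the environment of the deployment config can be listed and checked. A minimal sketch, assuming `oc` is logged in to the right project; the helper name `check_config_file_env` is hypothetical. Newer clients spell the command `oc set env`.

```shell
#!/bin/sh
# Check that CONFIG_FILE is set on the deployment config and points to a
# file below the config map mount path.
check_config_file_env() {
  dc="${1:-masters-service}"
  mount_path="${2:-/mnt}"
  # "--list" prints the variables as NAME=value lines.
  value="$(oc env "dc/${dc}" --list | sed -n 's/^CONFIG_FILE=//p')"
  case "$value" in
    "${mount_path}"/*) echo "CONFIG_FILE=${value}" ;;
    *) echo "CONFIG_FILE is unset or outside ${mount_path}" >&2; return 1 ;;
  esac
}
```

Called without arguments, it checks `dc/masters-service` against `/mnt` and returns a non-zero status if the variable is missing.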

own certificates

If you want to use your own certificates, you need to add secrets containing the key(s) and certificate(s) to the project.
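
One possible way to do this is sketched below. It assumes a single PEM file that holds the certificate followed by its key, because haproxy 1.7 expects both in one file for a `crt` setting. The helper name `add_cert_secret`, the secret name `masters-service-certs` and the mount path `/mnt/certs` are hypothetical; adjust them to whatever your own config file references. Newer clients spell `oc volume` as `oc set volume`.

```shell
#!/bin/sh
# Store a combined PEM file (certificate + key) as a secret and mount it
# into the haproxy pod. Secret name and mount path are hypothetical.
add_cert_secret() {
  pem="$1"
  secret="${2:-masters-service-certs}"
  mount_path="${3:-/mnt/certs}"
  if [ ! -r "$pem" ]; then
    echo "usage: add_cert_secret PEM_FILE [SECRET_NAME] [MOUNT_PATH]" >&2
    return 2
  fi
  # The file's base name becomes the key inside the secret.
  oc create secret generic "$secret" --from-file="$pem" || return 1
  oc volume dc/masters-service --add \
    --name="$secret" \
    --mount-path="$mount_path" \
    --type=secret \
    --secret-name="$secret"
}
```

After `add_cert_secret ./master.pem`, the file would appear as `/mnt/certs/master.pem` in the pod once the deployment config has rolled out again.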